Migrating the data center to DDR5 may matter more than other upgrades. Yet many people vaguely assume that DDR5 is simply a transitional step toward fully replacing DDR4. Processors inevitably change with the arrival of DDR5 and gain new memory interfaces, as happened with every previous DRAM generation from SDRAM through DDR4.

However, DDR5 is more than an interface change; it changes the concept of the processor memory system. In fact, the changes in DDR5 may be enough to justify an upgrade to a compatible server platform.

Why choose a new memory interface?

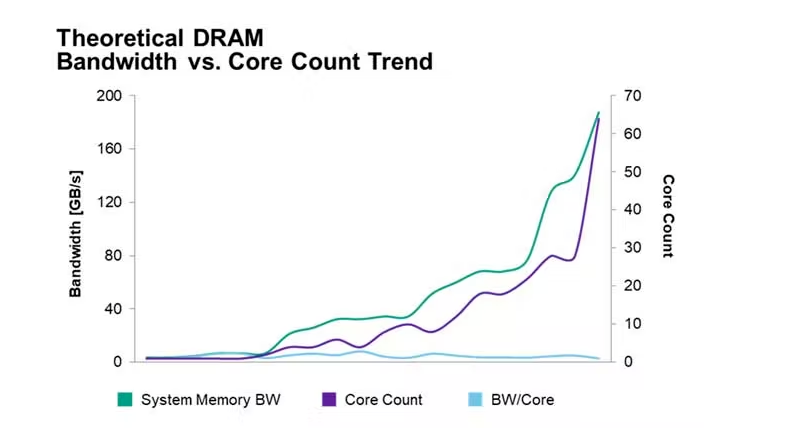

Computing problems have grown more complex since the advent of computers. This inevitable growth has driven evolution in the form of more servers, ever-increasing memory and storage capacities, and higher processor clock speeds and core counts. It has also driven architectural changes, including the recent adoption of disaggregation and AI techniques.

Some might think that these are all advancing in tandem because all the numbers are going up. However, while the number of processor cores has increased, DDR bandwidth has not kept pace, so the bandwidth per core has actually been decreasing.
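To make the per-core trend concrete, here is a minimal sketch. The channel count and core counts are hypothetical values chosen for illustration, and 25.6 GB/s is the theoretical peak of a single DDR4-3200 channel (3200 MT/s × 8 bytes), not a measured figure.

```python
# Illustrative only: the channel count and core counts below are hypothetical.
PEAK_GBPS_PER_CHANNEL = 25.6  # theoretical peak of one DDR4-3200 channel
CHANNELS = 8                  # assumed number of memory channels per socket


def bandwidth_per_core(cores, channels=CHANNELS, per_channel=PEAK_GBPS_PER_CHANNEL):
    """Return the theoretical peak memory bandwidth per core in GB/s."""
    return channels * per_channel / cores


# With the memory system held fixed, every doubling of cores halves the share.
for cores in (16, 32, 64):
    print(f"{cores} cores: {bandwidth_per_core(cores):.1f} GB/s per core")
```

With the memory configuration held constant, quadrupling the core count cuts each core's share of bandwidth to a quarter, which is the squeeze described above.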

Data sets keep expanding, especially for HPC, games, video encoding, machine learning inference, big data analysis, and databases. Memory bandwidth can be improved by adding more memory channels to the CPU, but this consumes more power. The processor pin count also limits the sustainability of this approach, so the number of channels cannot increase forever.

Some applications, especially high-core-count subsystems such as GPUs and specialized AI processors, use high-bandwidth memory (HBM). This technology moves data from stacked DRAM chips to the processor over a 1024-bit memory interface, making it a strong fit for memory-intensive applications like AI. In these applications, the processor and memory need to be as close together as possible to provide fast transfers. However, HBM is also more expensive, and the chips cannot be placed on replaceable or upgradeable modules.

DDR5 memory, which began to roll out widely this year, is designed to improve the channel bandwidth between the processor and memory while still supporting upgradeability.

Bandwidth and latency

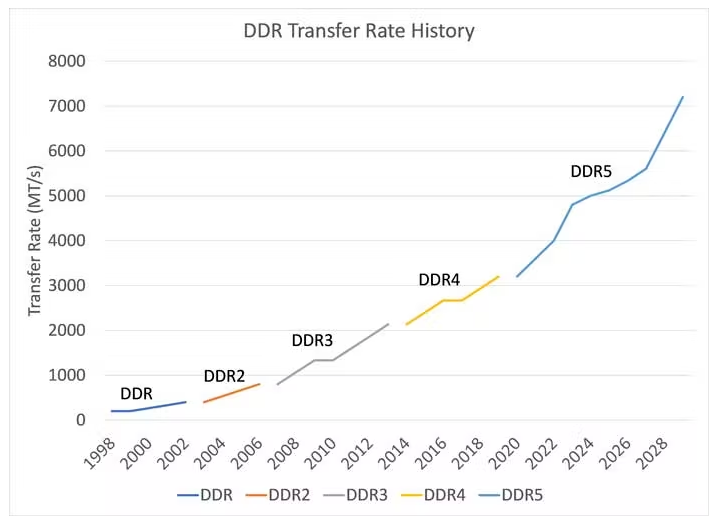

DDR5 transfers data faster than any previous generation of DDR. At the top of its current range, the DDR5 transfer rate is double the standard DDR4 maximum (6400 MT/s versus 3200 MT/s). DDR5 also introduces architectural changes that sustain performance at these transfer rates beyond the raw speed gain and improve the observed data bus efficiency.

Additionally, the burst length doubles from BL8 to BL16, which allows each module to have two independent sub-channels and essentially doubles the number of available channels in the system. You get not only higher transfer speeds but also a rebuilt memory channel that outperforms DDR4 even at the same transfer rate.
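The arithmetic behind the sub-channel split can be sketched as follows. The data widths used here (a 64-bit DDR4 channel and two 32-bit DDR5 sub-channels, excluding ECC bits) are not stated in this article; they are the commonly cited figures and are treated as assumptions in this sketch.

```python
def bytes_per_burst(data_width_bits, burst_length):
    """Bytes delivered by one burst: bus width in bytes times beats per burst."""
    return data_width_bits // 8 * burst_length


# Assumed data widths (ECC bits excluded), not taken from the article.
ddr4_channel = bytes_per_burst(data_width_bits=64, burst_length=8)
ddr5_subchannel = bytes_per_burst(data_width_bits=32, burst_length=16)

print("DDR4 channel, BL8:      ", ddr4_channel, "bytes per burst")
print("DDR5 sub-channel, BL16: ", ddr5_subchannel, "bytes per burst")
print("DDR5 module, 2 sub-channels:", 2 * ddr5_subchannel, "bytes in flight")
```

Under these assumptions, a single burst on a narrower sub-channel still delivers the same amount of data as a DDR4 burst, so one module can serve two independent requests at a time.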

Memory-intensive processes will see a huge boost from the transition to DDR5, and many of today’s data-intensive workloads, especially AI, databases, and online transaction processing (OLTP), fit this description.

The transfer rate also matters. DDR5 memory currently ranges from 4800 to 6400 MT/s, and rates are expected to rise as the technology matures.
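As a rough guide, a transfer rate in MT/s converts to theoretical peak bandwidth as in the sketch below. The 64-bit data width per module is an assumption (ECC bits excluded), and sustained bandwidth in a real system will be lower than these peak figures.

```python
def peak_bandwidth_gbps(transfer_rate_mts, data_bus_bits=64):
    """Theoretical peak in GB/s: mega-transfers per second times bytes per transfer."""
    return transfer_rate_mts * (data_bus_bits // 8) / 1000


# The two ends of the DDR5 range quoted above.
for rate in (4800, 6400):
    print(f"DDR5-{rate}: {peak_bandwidth_gbps(rate):.1f} GB/s peak per module")
```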

Energy consumption

DDR5 runs at a lower voltage than DDR4: 1.1 V instead of 1.2 V. While an 8% difference may not sound like much, it becomes more apparent when the voltages are squared to estimate the power ratio: 1.1²/1.2² ≈ 84%, which corresponds to a saving of about 16% in memory power, all else being equal.
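The voltage-squared estimate can be checked in a few lines. This sketch assumes that power scales with the square of the supply voltage and that everything else (frequency, capacitance, activity) stays equal, which is a simplification.

```python
DDR4_VOLTAGE = 1.2  # volts
DDR5_VOLTAGE = 1.1  # volts


def power_ratio(new_voltage, old_voltage):
    """Relative power under the simplifying assumption that P scales with V squared."""
    return (new_voltage / old_voltage) ** 2


ratio = power_ratio(DDR5_VOLTAGE, DDR4_VOLTAGE)
print(f"Power ratio: {ratio:.3f}")
print(f"Estimated saving: {(1 - ratio) * 100:.1f}%")
```

The ratio comes out at roughly 0.84. It applies to the memory's voltage-dependent power only, not to the power draw of the whole server.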

The architectural changes in DDR5 improve bandwidth efficiency and enable higher transfer rates. The resulting gains are difficult to quantify without measuring the exact application environment in which the technology is used. Even so, thanks to the improved architecture and higher transfer rates, the end user should see an improvement in energy per bit of data.

In addition, the DIMM regulates its own voltage, which reduces the voltage regulation required on the motherboard and provides additional energy savings.

For data centers, server power consumption and cooling costs are major concerns. With these factors in mind, the more energy-efficient DDR5 module can certainly be a reason to upgrade.

Error correction

DDR5 also incorporates on-chip error correction. As DRAM processes continue to shrink, many users are concerned about rising single-bit error rates and overall data integrity.

For server applications, on-chip ECC corrects single-bit errors during read commands before the data leaves the DDR5 device. This offloads part of the ECC burden from the system-level correction algorithm to the DRAM, reducing the load on the system.

DDR5 also introduces error check and scrub (ECS): when enabled, the DRAM device reads its internal data and writes back corrected data.
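The two mechanisms above, correcting a single-bit error on read and writing corrected data back during a scrub pass, can be illustrated with a toy example. This sketch uses a textbook Hamming(7,4) code purely to show the principle. It is not the code that DDR5 devices implement (real devices protect much wider data words), and the function names are hypothetical.

```python
def encode(data):
    """Encode 4 data bits as a 7-bit Hamming codeword (positions 1..7)."""
    d3, d5, d6, d7 = data
    p1 = d3 ^ d5 ^ d7  # parity over positions 1, 3, 5, 7
    p2 = d3 ^ d6 ^ d7  # parity over positions 2, 3, 6, 7
    p4 = d5 ^ d6 ^ d7  # parity over positions 4, 5, 6, 7
    return [p1, p2, d3, p4, d5, d6, d7]


def correct_and_decode(codeword):
    """Return (data bits, syndrome); a non-zero syndrome is the flipped position."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], syndrome


def scrub(memory):
    """Read every stored word, write back corrected data, return words fixed."""
    fixed = 0
    for i, word in enumerate(memory):
        data, syndrome = correct_and_decode(word)
        if syndrome:
            memory[i] = encode(data)
            fixed += 1
    return fixed


memory = [encode([1, 0, 1, 1]), encode([0, 1, 1, 0])]
memory[0][4] ^= 1  # simulate a single-bit error in the first stored word
print("Data read with error present:", correct_and_decode(memory[0])[0])
print("Words repaired by scrub:", scrub(memory))
```

A code of this kind corrects only one flipped bit per word, so system-level ECC still has a role in handling multi-bit errors.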

Summary

While the DRAM interface is usually not the first factor a data center considers when implementing an upgrade, DDR5 deserves a closer look, as the technology promises to save power while greatly improving performance.

DDR5 is an enabling technology that helps early adopters migrate gracefully to the composable, scalable data center of the future. IT and business leaders should evaluate DDR5 and determine how and when to migrate from DDR4 to DDR5 to complete their data center transformation plans.

Post time: Dec-15-2022